Neural networks are a paradigm rather than just a single algorithm.

The earliest artificial neurons resembled biological ones in that they received an input, then output a signal only if a threshold was met. This is an all-or-nothing process: either the neuron fires or it does not. Modern computing power has extended the paradigm dramatically, and it can now tackle massively complicated binary decision problems.
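To make the all-or-nothing idea concrete, here is a minimal sketch of a single threshold unit. The function name `threshold_neuron`, the weights, and the threshold value are all illustrative assumptions, not something taken from a particular library.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With two equally weighted inputs and a threshold of 2 (assumed values),
# the unit fires only when both inputs are on, like a logical AND.
both_on = threshold_neuron([1, 1], [1, 1], threshold=2)
one_on = threshold_neuron([1, 0], [1, 1], threshold=2)
print(both_on, one_on)
```

Lowering the threshold to 1 would make the same unit behave like a logical OR, which is the sense in which the threshold decides when the neuron fires.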

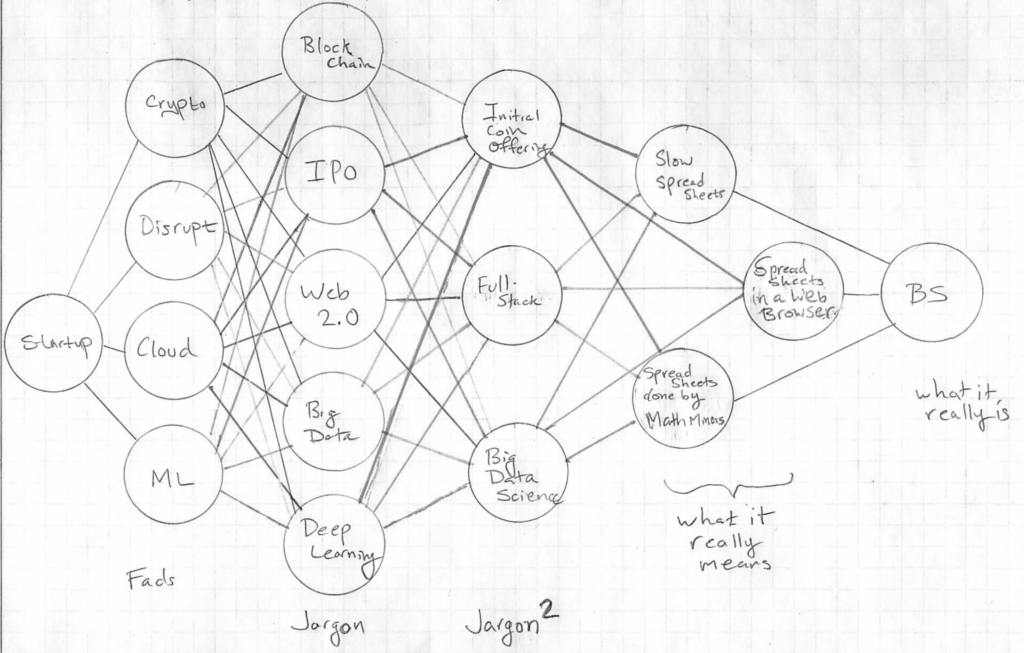

Although many neural networks have a ton of arcane connections and “hidden layers” (hidden only in that displaying them all is tedious), I have hand-drawn an example. This is a fully working neural network. My inspiration comes from participating in YCombinator’s Startup School this summer. It solves the problem many of us keep running into: how to rapidly tell if a startup is BS (a bad startup).

It takes in a set of words and decides whether a start-up venture is a “bad startup” or not. It does not say whether the start-up is good, only whether it is a “bad startup.” At each layer, a series of keywords sit in circles; these are the nodes. If a sufficient number of these nodes get activated, then the neural network tells you that the idea is BS.

So, for example, if a startup is going to disrupt the cloud using crypto, and has a web 2.0 big data blockchain, you can assume their initial coin offering, despite being full-stack, is really just a slow spreadsheet done in a browser by math minors. That’s BS, man!
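For the curious, the keyword-and-threshold idea can be sketched in a few lines of Python. This is only a rough approximation: the grouping of keywords into `LAYERS`, both threshold values, and the function name `is_bad_startup` are assumptions made for illustration, borrowing the buzzwords from the example above rather than reproducing the drawing.

```python
# Hypothetical keyword layers; the grouping in the hand-drawn network may differ.
LAYERS = [
    {"disrupt", "cloud", "crypto"},
    {"web 2.0", "big data", "blockchain"},
    {"initial coin offering", "full-stack"},
]
LAYER_THRESHOLD = 2   # assumed: keyword hits needed for a layer to fire
OUTPUT_THRESHOLD = 2  # assumed: fired layers needed to call BS


def is_bad_startup(pitch):
    """Return True if enough keyword layers fire on the pitch text."""
    text = pitch.lower()
    fired_layers = 0
    for keywords in LAYERS:
        # Each keyword is a node; it activates if it appears in the pitch.
        hits = sum(1 for keyword in keywords if keyword in text)
        if hits >= LAYER_THRESHOLD:
            fired_layers += 1
    return fired_layers >= OUTPUT_THRESHOLD


pitch = "We disrupt the cloud using crypto and a web 2.0 big data blockchain."
print(is_bad_startup(pitch))
print(is_bad_startup("We sell sandwiches near the train station."))
```

As in the drawing, a `False` result does not mean the startup is good, only that it did not trip enough buzzword nodes.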

Many thanks to William Gasarch at UMD for inspiring this.